Experimenting with mcp-go, AnythingLLM and local LLM executions

Overview

NOTE: I am not an LLM or AI expert, far from it. I decided to write this blog post mostly as a way to track my progress and share my findings! There may be some mistakes here; please let me know!

I got caught up in the MCP hype. Usually I wait a bit longer before engaging with these subjects, but when it comes to cool integrations between tools, I get excited and experiment fast.

MCP, in a one-line explanation (from my own head), is “a way to integrate local data with an LLM”. Mostly, it allows me to connect an LLM that understands a question like “what’s the current weather in Sao Paulo?” to an interface that receives a formatted query, requests information from some API (though it can also be a fully local execution, like listing my filesystem) and sends a structured response back to the LLM so it can be formatted.

It is, as its name (Model Context Protocol) says, a protocol that allows a developer to create an “API-ish” service that is queried in real time and provides answers based on the API response.

I decided to use a Go SDK, mcp-go, as Go is my comfort zone, but the MCP site has a bunch of useful examples in whatever language you feel more comfortable with (and it also has official SDKs in Python, NodeJS, etc).

Because I don’t have enough money to spend calling Claude (Anthropic, the original creator of MCP, is also the company behind Claude), I decided to use a very cool project called AnythingLLM, which allows me to execute local models and also supports MCP.

The code used in this blog post is also available on GitHub.

Concepts

Some concepts used here:

- MCP Server - AKA the AI Agent - what we are implementing here: a “server” that will be called to provide responses.

- MCP Client - a client that “knows” how to call an MCP server. In our case, it is AnythingLLM.

- Resources - our API responses, but they can also be local files, etc. They are consumed by the MCP Server and provided as a response to the MCP Client.

- Tools - the “methods” that can be called by the MCP Client. In our case, you are going to see it as the `getForecast()` function.

Prerequisites

I will not cover how to install every piece; it should be straightforward. All you need is to install AnythingLLM and load a model. I am using Llama 3.2 3B, but if you need more complex operations, AnythingLLM allows you to select different models to execute locally.

The API used in this example is based on the existing Weather MCP example, mostly converted to Go. We cover just the forecast API, not the alerts one, but go ahead and try implementing your own :) it is fun!

The API definition is also available here.

Getting started

First, we need to create a Go project with the mcp-go library:

```shell
go mod init weather-go
go get github.com/mark3labs/mcp-go
```

Defining the API responses

The forecast API returns its responses as JSON. To make our life easier, only the parts we care about were extracted, so the definitions look like:

```go
// WeatherPoints contains the response for API/points/latitude,longitude
// we care just about the Forecast field, that contains the new API URL that should be called
type WeatherPoints struct {
	Properties struct {
		Forecast string `json:"forecast"`
	} `json:"properties"`
}

// Forecast contains the forecast prediction for a lat,long.
// example: https://api.weather.gov/gridpoints/MTR/85,105/forecast
type Forecast struct {
	Properties struct {
		Periods []struct {
			Name             string `json:"name,omitempty"`
			Temperature      int    `json:"temperature,omitempty"`
			TemperatureUnit  string `json:"temperatureUnit,omitempty"`
			WindSpeed        string `json:"windSpeed,omitempty"`
			WindDirection    string `json:"windDirection,omitempty"`
			ShortForecast    string `json:"shortForecast,omitempty"`
			DetailedForecast string `json:"detailedForecast,omitempty"`
		} `json:"periods,omitempty"`
	} `json:"properties,omitempty"`
}
```
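To see what those structs actually keep, here is a small self-contained sketch that decodes two hand-written, trimmed payloads (made up for illustration, not real NWS responses) into the same struct shapes. Go's `encoding/json` silently ignores fields the structs do not declare (like `@context`, `gridId` or `icon` below), which is why extracting only the parts we care about works.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// WeatherPoints and Forecast repeat the shapes defined above.
type WeatherPoints struct {
	Properties struct {
		Forecast string `json:"forecast"`
	} `json:"properties"`
}

type Forecast struct {
	Properties struct {
		Periods []struct {
			Name             string `json:"name,omitempty"`
			Temperature      int    `json:"temperature,omitempty"`
			TemperatureUnit  string `json:"temperatureUnit,omitempty"`
			WindSpeed        string `json:"windSpeed,omitempty"`
			WindDirection    string `json:"windDirection,omitempty"`
			ShortForecast    string `json:"shortForecast,omitempty"`
			DetailedForecast string `json:"detailedForecast,omitempty"`
		} `json:"periods,omitempty"`
	} `json:"properties,omitempty"`
}

// Hand-written, trimmed payloads for illustration only (not real API output).
const samplePoints = `{
  "@context": "ignored by our struct",
  "properties": {
    "gridId": "MTR",
    "forecast": "https://api.weather.gov/gridpoints/MTR/85,105/forecast"
  }
}`

const sampleForecast = `{
  "properties": {
    "periods": [
      {"name": "Tonight", "temperature": 52, "temperatureUnit": "F",
       "windSpeed": "5 mph", "windDirection": "W", "icon": "ignored",
       "shortForecast": "Clear", "detailedForecast": "Clear, with a low around 52."}
    ]
  }
}`

// decodeSamples unmarshals both payloads; undeclared fields are dropped.
func decodeSamples() (WeatherPoints, Forecast, error) {
	var points WeatherPoints
	if err := json.Unmarshal([]byte(samplePoints), &points); err != nil {
		return WeatherPoints{}, Forecast{}, err
	}
	var forecast Forecast
	if err := json.Unmarshal([]byte(sampleForecast), &forecast); err != nil {
		return WeatherPoints{}, Forecast{}, err
	}
	return points, forecast, nil
}

func main() {
	points, forecast, err := decodeSamples()
	if err != nil {
		panic(err)
	}
	fmt.Println("next URL:", points.Properties.Forecast)
	for _, p := range forecast.Properties.Periods {
		fmt.Printf("%s: %d°%s, %s\n", p.Name, p.Temperature, p.TemperatureUnit, p.ShortForecast)
	}
}
```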

Building the Forecast tool

The forecast tool is how we tell our client how and when the forecast should be called. A tool declares the parameters it needs; in general these can be things like “a city name”, “a file location” or “which numbers should be summed”, and in our case they are a latitude and a longitude.

The snippets below define the tool and the call execution. Later we will add them to the main MCP server.

Let’s take a look at the function:

```go
import (
	"github.com/mark3labs/mcp-go/mcp"
)

// Add forecast tool
func getForecast() mcp.Tool {
	weatherTool := mcp.NewTool("get_forecast",
		mcp.WithDescription("Get weather forecast for a location"),
		mcp.WithNumber("latitude",
			mcp.Required(),
			mcp.Description("Latitude of the location"),
		),
		mcp.WithNumber("longitude",
			mcp.Required(),
			mcp.Description("Longitude of the location"),
		),
	)
	return weatherTool
}
```

When the MCP Client connects to an MCP Server, the MCP Server exposes get_forecast as a valid tool, requesting latitude and longitude parameters.

Note: I don’t yet understand the magic between a prompt receiving a request like “give me the forecast for bla” and deferring to the get_forecast tool!

Defining the forecast tool is not enough. We also need to tell our server what it should execute once a “get_forecast” call is made. First, we define a utility function to call the API:

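```go
import (
	"fmt"
	"io"
	"net/http"
)

const (
	nws_api_base = "https://api.weather.gov"
	user_agent   = "weather-app/1.0"
)

// makeNWSRequest performs a GET against the NWS API and returns the raw body.
func makeNWSRequest(requrl string) ([]byte, error) {
	client := &http.Client{}
	req, err := http.NewRequest(http.MethodGet, requrl, nil)
	if err != nil {
		return nil, err
	}
	// The NWS API asks clients to identify themselves with a User-Agent.
	req.Header.Set("User-Agent", user_agent)
	req.Header.Set("Accept", "application/geo+json")

	resp, err := client.Do(req)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()

	// Treat non-200 answers (for example, coordinates the API does not cover)
	// as errors instead of decoding an error document as forecast data.
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("unexpected status %d from %s", resp.StatusCode, requrl)
	}

	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return nil, err
	}
	return body, nil
}
```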
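Because the API call is isolated in one function, it can be exercised without touching the real API. The sketch below uses `net/http/httptest` to start a local fake NWS server that only answers `/points/...` paths and only accepts our `User-Agent`; `fetch` and `newFakeNWS` are hypothetical names, with `fetch` being a copy of `makeNWSRequest` plus a status-code check. The fake's rules are made up for the test and do not reproduce the real API's behavior.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strings"
)

const userAgent = "weather-app/1.0"

// fetch mirrors makeNWSRequest: GET with our headers, error on non-200.
func fetch(url string) ([]byte, error) {
	req, err := http.NewRequest(http.MethodGet, url, nil)
	if err != nil {
		return nil, err
	}
	req.Header.Set("User-Agent", userAgent)
	req.Header.Set("Accept", "application/geo+json")
	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("unexpected status %d from %s", resp.StatusCode, url)
	}
	return io.ReadAll(resp.Body)
}

// newFakeNWS starts a local stand-in server with made-up rules.
func newFakeNWS() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.Header.Get("User-Agent") != userAgent {
			http.Error(w, "unknown client", http.StatusForbidden)
			return
		}
		if !strings.HasPrefix(r.URL.Path, "/points/") {
			http.NotFound(w, r)
			return
		}
		w.Header().Set("Content-Type", "application/geo+json")
		fmt.Fprint(w, `{"properties":{"forecast":"fake"}}`)
	}))
}

func main() {
	srv := newFakeNWS()
	defer srv.Close()
	body, err := fetch(srv.URL + "/points/33.7490,-84.3880")
	fmt.Println(string(body), err)
}
```

Swapping the base URL for `srv.URL` is the only change needed to point the same request logic at the fake server.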

Then we need the handler function that makes the requests to the API and formats the response:

```go
import (
	"context"
	"encoding/json"
	"fmt"

	"github.com/mark3labs/mcp-go/mcp"
)

const (
	// We just want the next 5 forecast results
	maxForecastPeriods = 5
)

func forecastCall(ctx context.Context, request mcp.CallToolRequest) (*mcp.CallToolResult, error) {
	// Fetch latitude and longitude passed to the tool call. JSON numbers
	// arrive as float64; check the assertions instead of panicking.
	latitude, ok := request.Params.Arguments["latitude"].(float64)
	if !ok {
		return nil, fmt.Errorf("latitude must be a number")
	}
	longitude, ok := request.Params.Arguments["longitude"].(float64)
	if !ok {
		return nil, fmt.Errorf("longitude must be a number")
	}

	// First API call: resolve the coordinates into a forecast URL.
	pointsURL := fmt.Sprintf("%s/points/%.4f,%.4f", nws_api_base, latitude, longitude)
	points, err := makeNWSRequest(pointsURL)
	if err != nil {
		return nil, fmt.Errorf("error calling points API: %w", err)
	}
	pointsData := &WeatherPoints{}
	if err := json.Unmarshal(points, pointsData); err != nil {
		return nil, fmt.Errorf("error unmarshalling points data: %w", err)
	}
	if pointsData.Properties.Forecast == "" {
		return nil, fmt.Errorf("points does not contain forecast url")
	}

	// Make a second API call with the Forecast URL to get the forecast result
	forecastReq, err := makeNWSRequest(pointsData.Properties.Forecast)
	if err != nil {
		return nil, fmt.Errorf("error getting the forecast data: %w", err)
	}
	forecastData := &Forecast{}
	if err := json.Unmarshal(forecastReq, forecastData); err != nil {
		return nil, fmt.Errorf("error unmarshalling forecast data: %w", err)
	}

	type ForecastResult struct {
		Name        string `json:"Name"`
		Temperature string `json:"Temperature"`
		Wind        string `json:"Wind"`
		Forecast    string `json:"Forecast"`
	}

	// Format a JSON containing at most maxForecastPeriods forecasts.
	// Appending (instead of indexing into a pre-sized slice) avoids empty
	// entries when the API returns fewer periods than the limit.
	forecast := make([]ForecastResult, 0, maxForecastPeriods)
	for _, period := range forecastData.Properties.Periods {
		if len(forecast) == maxForecastPeriods {
			break
		}
		forecast = append(forecast, ForecastResult{
			Name:        period.Name,
			Temperature: fmt.Sprintf("%d°%s", period.Temperature, period.TemperatureUnit),
			Wind:        fmt.Sprintf("%s %s", period.WindSpeed, period.WindDirection),
			Forecast:    period.DetailedForecast,
		})
	}

	forecastResponse, err := json.Marshal(forecast)
	if err != nil {
		return nil, fmt.Errorf("error marshalling forecast: %w", err)
	}

	// And return it as string back to the caller function
	return mcp.NewToolResultText(string(forecastResponse)), nil
}
```

Defining the main server

With everything in place, we can define the main server, add our tool and the “handler” function for that tool:

```go
package main

import (
	"fmt"
	"os"

	"github.com/mark3labs/mcp-go/server"
)

func main() {
	// Create a new MCP server
	s := server.NewMCPServer(
		"Weather Demo",
		"1.0.0",
		server.WithResourceCapabilities(true, true),
		server.WithLogging(),
		server.WithRecovery(),
	)

	// Add the forecast tool and its handler function
	s.AddTool(getForecast(), forecastCall)

	// Start the server. Stdout carries the protocol messages,
	// so report errors on stderr.
	if err := server.ServeStdio(s); err != nil {
		fmt.Fprintf(os.Stderr, "Server error: %v\n", err)
	}
}
```

With that in place, we can try simply building and executing our Go program. It should not print anything, and it should wait for input on STDIO. After that, interrupt it with Ctrl-C:

```shell
go build -o forecast .
./forecast
```

Integrating with AnythingLLM

The integration with AnythingLLM is done by defining the MCP server binary that we want it to call. It is a JSON config, and its schema follows the same definition as Claude Desktop's.

The configuration location may vary per OS; on macOS it is defined at `~/Library/Application\ Support/anythingllm-desktop/storage/plugins/anythingllm_mcp_servers.json`.

Here is how mine is defined:

```json
{
  "mcpServers": {
    "weather": {
      "command": "/Users/rkatz/codes/mcp/weather-go/forecast"
    }
  }
}
```

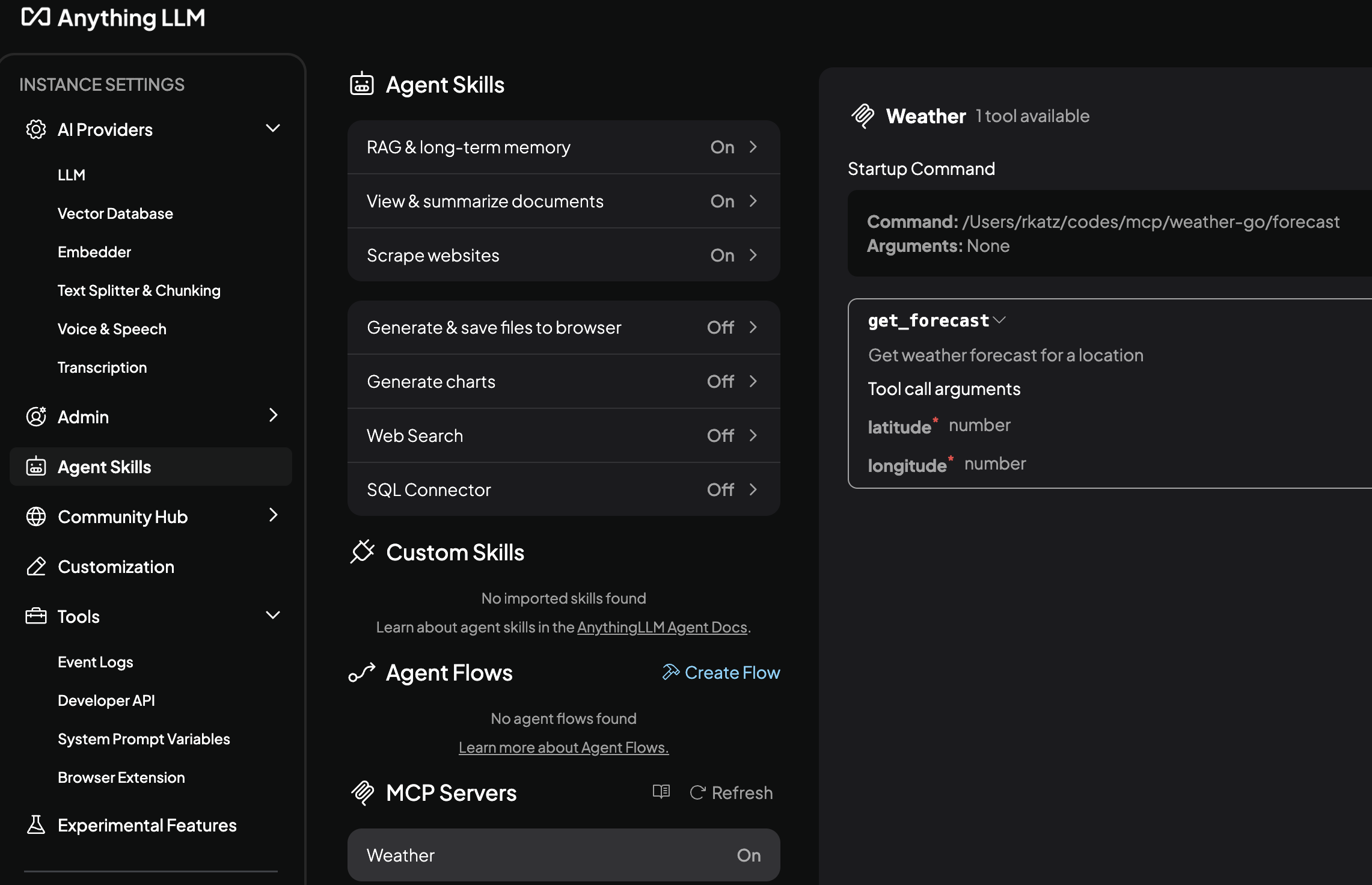

Once it is defined, we can verify if it is properly working on the UI:

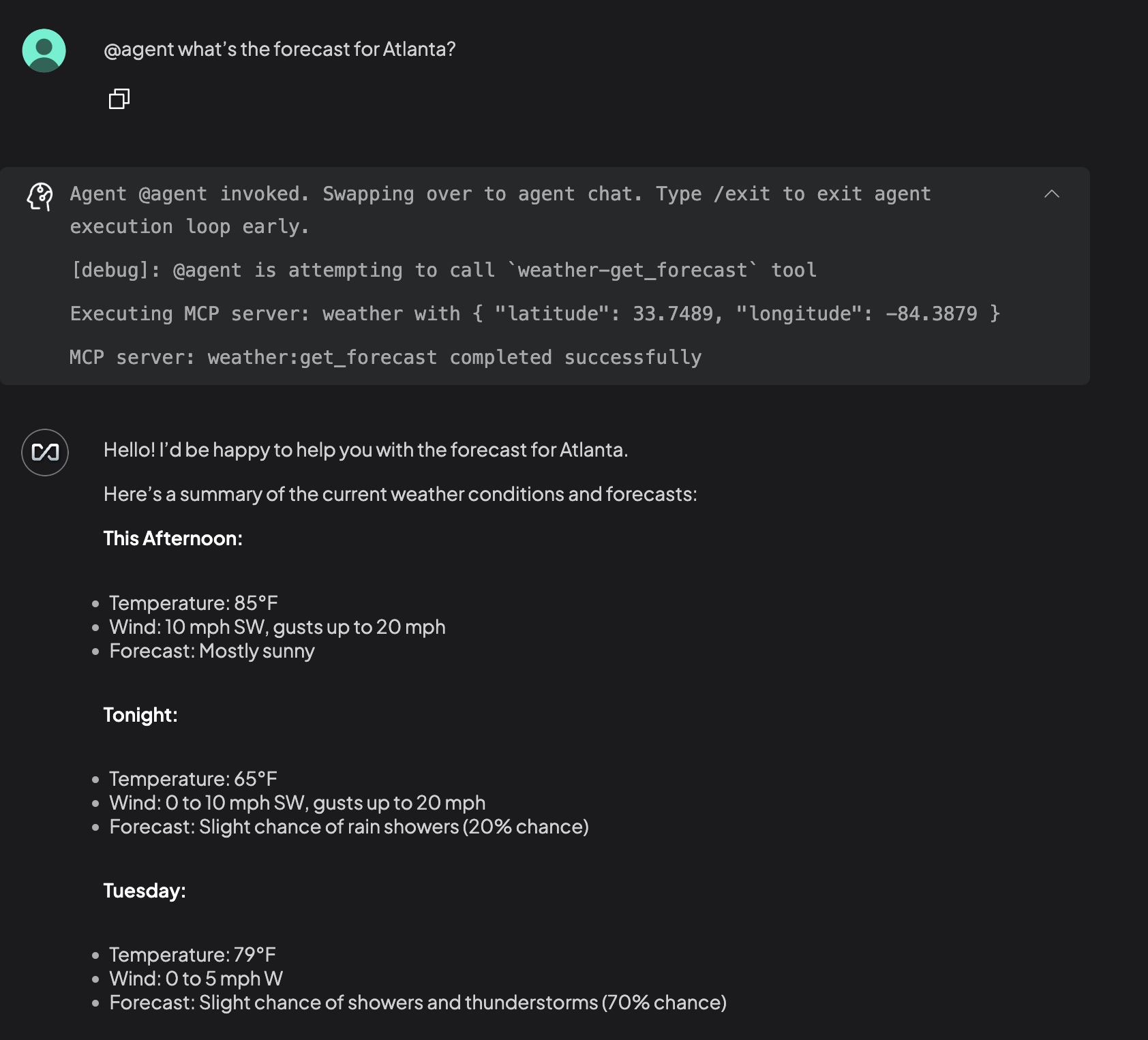

Now that we can see it is working, we can make a prompt like:

```
@agent what's the forecast for Atlanta?
```

It is important to use `@agent` at the beginning of the prompt. AnythingLLM requires it to understand that the request should be sent to the MCP Server.

With that, we can see that AnythingLLM deferred the call to the MCP Server, made the call and formatted the output.

Debugging

Sometimes things may go wrong. The most common mistake I have faced is a wrong command path in the AnythingLLM configuration.

If the prompt answers that there’s some error on the Agent/MCP Server, you can ask it for more information, like “Can you print the full error?” or “Can you print the JSON response from the server?”, and it should print some useful information.

Acknowledgements

My thanks here go to my friend Anderson Duboc, who helped me start scratching this surface with all the patience that I require! :)

Also, thanks to the folks who created this amazing SDK and saved me a lot of time.